The implications of ChatGPT on customer experience

10 January 2023

26/2/2023: This article has been republished on VentureBeat.

OpenAI opened the ChatGPT beta in late November 2022, in a move that produced the most powerful natural language processing (NLP) AI model to date, quickly going viral and attracting a million users within the first 5 days.

TL;DR: this new development is a big deal, and it will affect every single industry. We’ll share our thoughts on specific industry impacts in a series of articles over the coming weeks, but the focus of this thought piece is the impact of ChatGPT on Customer Experience (CX).

Will the rise of models like ChatGPT completely replace chatbots?

The underlying premise of this question is that large language models (LLMs) like ChatGPT transform chatbots from clunky, impersonal and faulty tools into algorithms so meticulous that (a) human interaction is no longer needed, and (b) traditional ways of building chatbots are now completely obsolete. We’ll explore these premises and give our view on how ChatGPT will impact the CX space.

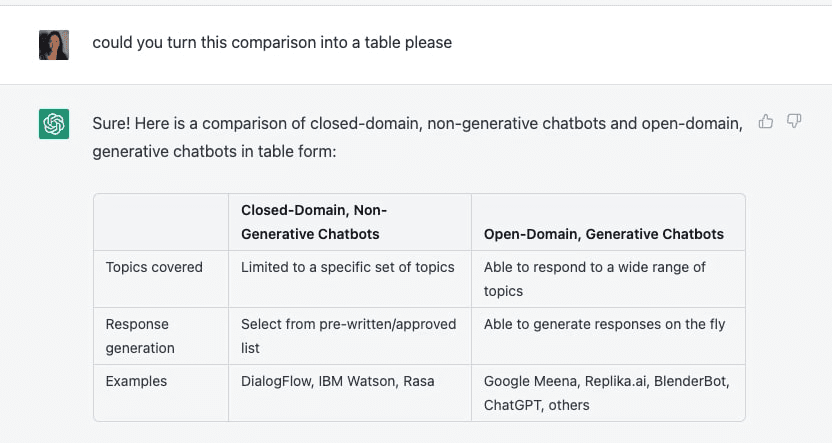

Broadly speaking, we differentiate between conventional chatbots and chatbots like ChatGPT built on generative LLMs.

Conventional chatbots: this covers most chatbots you’ll encounter in the wild, from chatbots to check the status of your DPD delivery to customer service chatbots for multinational banks, built on technologies like DialogFlow, IBM Watson, or Rasa. They are limited to a specific set of topics, are not able to respond to inputs outside of those topics (closed-domain), and can only produce responses that have been pre-written or pre-approved by a human (non-generative).
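To make “closed-domain” and “non-generative” concrete, here is a minimal sketch in Python. It is not how DialogFlow, IBM Watson, or Rasa work internally (those rely on trained intent classifiers); the keyword matching, intent names, and response texts are all illustrative assumptions.

```python
import re

# Pre-approved responses: the bot can only ever say one of these (non-generative).
# Intent names, keywords, and wording are hypothetical examples.
APPROVED_RESPONSES = {
    "delivery_status": "You can track your parcel with the tracking number in your confirmation email.",
    "opening_hours": "Our support team is available Monday to Friday, 9am to 5pm.",
}

# Keywords that map user input to an intent (closed-domain: only these topics exist).
INTENT_KEYWORDS = {
    "delivery_status": {"delivery", "parcel", "tracking"},
    "opening_hours": {"open", "hours", "opening"},
}

FALLBACK = "Sorry, I can only help with deliveries and opening hours."


def reply(user_input: str) -> str:
    """Return a pre-approved response, or the fallback for out-of-domain input."""
    words = set(re.findall(r"[a-z]+", user_input.lower()))
    best_intent, best_overlap = None, 0
    for intent, keywords in INTENT_KEYWORDS.items():
        overlap = len(words & keywords)
        if overlap > best_overlap:
            best_intent, best_overlap = intent, overlap
    if best_intent is None:
        return FALLBACK
    return APPROVED_RESPONSES[best_intent]
```

Anything outside the two topics, such as a request to write a poem, falls through to the fallback message rather than producing new text.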

LLM-based chatbots: these are able to respond to a wide range of topics (open-domain) and are able to generate responses on the fly, rather than just selecting from a pre-written list of responses (generative). This includes Google Meena, Replika.ai, BlenderBot, ChatGPT, and others.

LLM-based chatbots and conventional chatbots fulfil somewhat different purposes, and for many applications in CX, the open nature of LLMs is less help and more hindrance when building a chatbot that can specifically answer questions about your product or help a user with an issue they’re experiencing.

LLMs won’t simply be let loose into the CX domain tomorrow; the reality will be much more nuanced. The name of the game will be marrying the expressiveness and fluency of ChatGPT with the fine-grained control and boundaries of conventional chatbots. This is something that chatbot teams with a research focus will be best suited for.

Where can you already use ChatGPT today when creating chatbots?

There are many parts of chatbot creation and maintenance that ChatGPT is not suited for in its current state, but these are some areas it is already well suited for:

Brainstorming potential questions and answers for a given closed domain. ChatGPT can draw on its training data alone or on more specific information, supplied either through fine-tuning (if OpenAI offers it once ChatGPT becomes accessible by API) or by including the desired information in the prompt (prompt engineering).

Caveat: It is still difficult to know with certainty where a piece of information comes from, so this development process will continue to require a human-in-the-loop to validate output.
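As a rough sketch of the prompt engineering route and the human-in-the-loop caveat, the Python below builds a prompt containing domain information and keeps every generated question-answer pair unapproved until a person has checked it. The function names, the prompt wording, and the `CandidateQA` structure are our own illustrative assumptions; no model is called here.

```python
from dataclasses import dataclass


def build_brainstorm_prompt(domain: str, facts: list[str], n_questions: int = 5) -> str:
    """Put domain-specific information into the prompt (prompt engineering)."""
    fact_lines = "\n".join(f"- {fact}" for fact in facts)
    return (
        f"You are helping to design a customer service chatbot for {domain}.\n"
        f"Use only the following information:\n{fact_lines}\n"
        f"List {n_questions} questions a customer might ask, each with a short answer."
    )


@dataclass
class CandidateQA:
    question: str
    answer: str
    approved: bool = False  # stays False until a human has checked the answer


def approve(candidate: CandidateQA) -> CandidateQA:
    """Record that a human reviewer has validated this question-answer pair."""
    return CandidateQA(candidate.question, candidate.answer, approved=True)


def publishable(candidates: list[CandidateQA]) -> list[CandidateQA]:
    """Only human-approved pairs may go into the chatbot's content."""
    return [c for c in candidates if c.approved]
```

The design choice is that generated content is never trusted by default: `publishable` returns nothing until a reviewer has explicitly approved each pair.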

Training your chatbot. ChatGPT can be used to paraphrase questions a user might ask, particularly in a variety of styles, and even generate example conversations, thereby automating large parts of the training.
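A minimal sketch of the paraphrasing step might look like the following. It assumes the model is asked for a numbered list and that its raw text output is pasted in or passed on by some other means; the style names, prompt wording, and function names are hypothetical.

```python
import re

STYLES = ["formal", "casual", "frustrated", "very brief"]  # illustrative choices


def build_paraphrase_prompt(question: str, style: str, n: int = 3) -> str:
    """Ask the model for n paraphrases of a user question in a given style."""
    return (
        f"Rewrite the customer question below in {n} different ways, "
        f"in a {style} style. Return a numbered list only.\n"
        f"Question: {question}"
    )


def parse_numbered_list(model_output: str) -> list[str]:
    """Extract items from lines such as '1. text' or '2) text'."""
    items = []
    for line in model_output.splitlines():
        match = re.match(r"\s*\d+[.)]\s+(.*\S)", line)
        if match:
            items.append(match.group(1))
    return items


def training_examples(intent: str, model_output: str) -> list[dict]:
    """Label each parsed paraphrase with the intent it should train."""
    return [{"intent": intent, "text": text} for text in parse_numbered_list(model_output)]
```

Looping `build_paraphrase_prompt` over `STYLES` gives one prompt per style, and `training_examples` turns each response into labelled examples that can be reviewed before they are added to the training set.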

Testing & QA. Using ChatGPT to test an existing chatbot by simulating user inputs holds much promise, particularly when combined with human testers. ChatGPT can be told the topics to cover in its testing with different levels of granularity, and like in generating training data, the style and tone it uses can be varied.
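The testing loop described above can be sketched as below. Both `bot` (the chatbot under test) and `simulate_user` (whatever produces a ChatGPT-written message for a topic and style) are passed in as plain functions, so nothing here assumes a particular API; all names are illustrative.

```python
from itertools import product
from typing import Callable


def run_simulated_tests(
    bot: Callable[[str], str],
    simulate_user: Callable[[str, str], str],
    topics: list[str],
    styles: list[str],
    fallback: str,
) -> list[dict]:
    """Send one simulated message per (topic, style) pair and log the result."""
    results = []
    for topic, style in product(topics, styles):
        message = simulate_user(topic, style)
        response = bot(message)
        results.append({
            "topic": topic,
            "style": style,
            "message": message,
            "response": response,
            "needs_review": response == fallback,  # flag for a human tester
        })
    return results
```

Rows flagged `needs_review` are the cases where the chatbot fell back to its default answer, which is where human testers would look first.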

We see the next generation of CX chatbots continuing to be based on conventional, non-generative technology, but involving generative models heavily throughout the creation process.

Chatbots are set to level up the current CX space

The key impact of LLMs on consumer expectations will be more visibility of chatbots, an increased urgency to incorporate them into CX, a heightened reputation of chatbots, and a higher standard. In other words, chatbots are getting a glow-up!

We’ve all experienced them – clunky chatbots with extremely limited dialogue options that churn out painfully robotic lines (if they can even understand anything at all). While poorly performing chatbots are already on the way out, standards will now be shooting through the roof to avoid this experience, and the shift from human to AI will rapidly continue.

A recent report predicts that the share of interactions between customers and call centres handled by AI will increase from 2% in 2022 to more than 15% by 2026, and double to 30% by 2031. Given the rapid adoption of AI over the past 3-5 years and the exponential advancements in AI, we expect the actual growth to be considerably higher.
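To put the report’s percentages in perspective, the short calculation below works out the yearly growth rate they imply. It assumes a constant compound rate within each period and treats “more than 15%” as exactly 15%; both are simplifications of ours, not statements from the report.

```python
def implied_annual_growth(start_share: float, end_share: float, years: int) -> float:
    """Constant yearly growth rate that turns start_share into end_share."""
    return (end_share / start_share) ** (1 / years) - 1


# Percentages quoted above, expressed as fractions.
growth_2022_2026 = implied_annual_growth(0.02, 0.15, 2026 - 2022)
growth_2026_2031 = implied_annual_growth(0.15, 0.30, 2031 - 2026)

print(f"2022-2026: {growth_2022_2026:.1%} per year")
print(f"2026-2031: {growth_2026_2031:.1%} per year")
```

Under these assumptions the forecast implies growth of roughly 65% a year up to 2026, slowing to roughly 15% a year afterwards.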

Brands like Lemonaid, Oura, Airbnb or ExpressVPN have paved the way for excellent 24/7 support, so much so that today’s customers simply expect a seamless experience.

And the consequences of failing to deliver great service are no joke. Poor service can have a significant impact on a brand’s retention rates, causing would-be buyers to look elsewhere: according to Forbes, bad customer service costs businesses a combined $62 billion each year.

ChatGPT is certainly in a hype phase, but there are significant risks in using it as-is right now. We believe that the majority of the current risks result from ChatGPT’s unpredictability, which creates reputational, brand and legal concerns. Whilst the buzz around ChatGPT is good, you must not forget its associated risks and the importance of selecting the right partner to avoid any pitfalls.

In particular, we see the following risks to big businesses adopting LLMs directly within their customer journey:

Harm to brand image - sharing of offensive content

Misleading customers - sharing false content

Potential for adversarial attack - people trying to break the chatbot to damage reputations

False creativity - users mistaking the “stochastic parrot” for genuine human creativity / connection

False authority - ChatGPT produces authoritative-sounding text, which humans are notoriously bad at refuting

Data security, ownership and confidentiality - OpenAI has insight into and access to all data shared via ChatGPT, which opens up a significant risk of confidentiality breaches

In other words: “Just because you can, it doesn’t mean you should.”

Startups and organisations will inevitably try to introduce safeguards and other measures to mitigate some of these risks. However, a lot of companies, including many of those we work with, still want (or are legally obliged) to retain full control of the content – our legal and FCA-regulated clients are a good example. Fundamentally, with generative LLMs like ChatGPT, retaining full content control is impossible. (We will have another article expanding on this topic soon – watch this space!)

When it comes to chatbot development itself, players using open-source stacks like Rasa (the framework we most enjoy working with at Springbok) or Botpress will have the advantage of agility, thanks to the flexibility and versatility these open systems enable. In the short to medium term, chatbot developers with experience in NLP and in using LLMs will be the ones to bring this technology to the chatbot market, because they are able to effectively leverage and fine-tune the models to their (or their clients’) needs and use cases.

In the long term, small companies will continue to be better positioned to swiftly implement changes than large, established platforms like ChatGPT. Amidst the current financial market volatility, however, we anticipate a potential market consolidation of players in the next 12-24 months, with the larger players acquiring smaller players, and – a common occurrence in the chatbot space – clients buying their chatbot suppliers.

Which industries will adopt ChatGPT in their CX processes first?

Despite ChatGPT still being in beta with no API available, individuals have already published a myriad of exciting use cases, including a number of browser extensions, mainly via Twitter.

Provided ChatGPT is released to the public (we currently expect a volume-based pricing model, as with previous models such as GPT-3), small players will continue to be the ones pushing the boundaries with novel applications.

Having experimented with some of the potential applications of GPT-3 and now ChatGPT in chatbots, we at Springbok are excited to keep using models like ChatGPT as part of our chatbot development processes going forward.

Get in touch to discuss chatbots and ChatGPT in the context of your business today at victoria@springbok.ai

This article is part of a series exploring ChatGPT and what this means for the chatbot industry.
